Thread Pool Pattern to the Rescue in Heavy-Computing React Applications

I say “queues” — you think backend or microservices. I say “threads” — you think backend again. That’s normal. Some patterns naturally live in certain corners of our minds, and that’s fine — I’m no different. The key is being able to use your experience and familiarity with these patterns in other areas of software development.

In this post, I’ll show how the Worker Threads Pool pattern can be applied in a React application to significantly optimize heavy computation tasks. The truth is that most React apps run entirely on the main thread, because that’s simply how JavaScript works by default.

I touched on the topic of offloading heavy CPU tasks to a Web Worker in my previous article, where I presented a React example running face-recognition and face-detection inference entirely in the browser. This time, I’d like to optimize that example by applying the Thread Pool pattern.

Before diving into the code, let’s take a look at a high-level explanation of the pattern.

What Is The Thread Pool Pattern

The Thread Pool Pattern is a design pattern in which a fixed number of worker threads is prepared to execute tasks. Tasks are dispatched to these workers, and when all workers are busy, new tasks are placed into a queue. As soon as any worker becomes available, the next task is taken from the queue and assigned to it. Tasks are usually taken from the queue using the FIFO (first in, first out) approach.
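To make the description above concrete, here is a minimal sketch of the idea without any real threads. A fixed number of "runners" stand in for worker threads, and each one pulls the oldest waiting task from a shared FIFO queue as soon as it is free. The names runWithPool, PoolTask, and demoRunWithPool are hypothetical and used only for illustration; they are not part of the application discussed in this post.

```typescript
// A task is any function that returns a promise.
type PoolTask<T> = () => Promise<T>;

// Runs `tasks` with at most `poolSize` of them in flight at once.
// Results are returned in the same order as the input tasks.
// If any task rejects, the returned promise rejects as well.
async function runWithPool<T>(tasks: PoolTask<T>[], poolSize: number): Promise<T[]> {
  const results: T[] = new Array<T>(tasks.length);
  let nextIndex = 0; // head of the FIFO queue

  // One runner plays the role of one worker thread.
  const runner = async (): Promise<void> => {
    while (nextIndex < tasks.length) {
      const index = nextIndex++; // claim the oldest waiting task
      results[index] = await tasks[index]();
    }
  };

  const runnerCount = Math.max(1, Math.min(poolSize, tasks.length));
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Demo: five tasks, two runners. `peak` records the highest number
// of tasks that were running at the same time.
async function demoRunWithPool(): Promise<{ results: number[]; peak: number }> {
  const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
  let active = 0;
  let peak = 0;
  const tasks = [1, 2, 3, 4, 5].map((n) => async () => {
    active++;
    peak = Math.max(peak, active);
    await sleep(10);
    active--;
    return n * n;
  });
  const results = await runWithPool(tasks, 2);
  return { results, peak };
}

demoRunWithPool().then(({ results, peak }) => {
  console.log(results, peak); // [ 1, 4, 9, 16, 25 ] 2
});
```

The pool built later in this post dispatches tasks to a free worker instead of letting workers pull from the queue, but the observable behavior is the same: no more than a fixed number of tasks run at once, and waiting tasks start in the order they arrived.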

Existing Code Overview

The application we’re going to optimize is a React app that takes an example image containing sample faces and a set of additional images. Every face that matches the faces from the example image is blurred.

The app’s logic consists of two main modules:

useFace: a hook that contains the logic for calling the inference performed inside a Web Worker (a separate thread). It acts as the glue between the worker and the React state:

typescript

import { useEffect, useRef, useState } from "react";

import * as faceapi from "face-api.js";

import * as Comlink from "comlink";

import type { FaceDetectionWorker } from "../types";

async function getImageWithDetections(

allExampleFaces: Float32Array[],

targetImageData: { id: number; src: string },

detector: (transferObj: {

allExampleFaces: Float32Array[];

allTargetImages: ArrayBuffer;

}) => Promise<

faceapi.WithFaceDescriptor<

faceapi.WithFaceLandmarks<

{ detection: faceapi.FaceDetection },

faceapi.FaceLandmarks68

>

>[]

>,

) {

const { id, src } = targetImageData;

const arrayBuffer = await (await fetch(src)).arrayBuffer();

const arrayBufferForDetector = arrayBuffer.slice(0);

const payload = {

allExampleFaces,

allTargetImages: arrayBufferForDetector,

};

const matchedDescriptors = await detector(payload);

return {

id,

src,

imgElement: arrayBuffer,

detections: matchedDescriptors,

};

}

function useFace() {

const [exampleImage, setExampleImage] = useState<string | null>(null);

const [targetImages, setTargetImages] = useState<string[]>([]);

const [isLoading, setIsLoading] = useState(false);

const [error, setError] = useState<string | null>(null);

const [outputImages, setOutputImages] = useState<string[]>([]);

const workerRef = useRef<Worker | null>(null);

useEffect(() => {

const runWorker = async () => {

const worker = new Worker(

new URL("../face-detection.worker", import.meta.url),

{ type: "module" },

);

workerRef.current = worker;

};

runWorker().catch((error) => {

console.error("Error initializing worker:", error);

});

return () => {

workerRef.current?.terminate();

};

}, []);

const handleFace = async () => {

if (!exampleImage || !workerRef.current) {

return;

}

try {

console.time("face handler performance");

const api = Comlink.wrap<Comlink.Remote<FaceDetectionWorker>>(

workerRef.current as any,

);

setIsLoading(true);

setError(null);

const exampleArrayBuffer = await (

await fetch(exampleImage)

).arrayBuffer();

const allExampleFaces = await api.extractAllFaces(exampleArrayBuffer);

const targetImagesWithId = targetImages.map((targetImage, index) => ({

id: index,

src: targetImage,

}));

for (const targetImage of targetImagesWithId) {

const imageWithDescriptors = await getImageWithDetections(

allExampleFaces.map((face) => face.descriptor),

targetImage,

api.detectMatchingFaces,

);

const output = await api.drawOutputImage(imageWithDescriptors);

const url = URL.createObjectURL(output);

setOutputImages((prevState) => [...prevState, url]);

}

console.timeEnd("face handler performance");

} catch (err) {

setError("Error processing image");

console.error(err);

} finally {

setIsLoading(false);

}

};

return {

handleFace,

isLoading,

error,

outputImages,

loadExampleImage: setExampleImage,

loadTargetImages: setTargetImages,

exampleImage,

targetImages,

};

}

export default useFace;

FaceDetectionWorker: a Web Worker class that does all the heavy lifting, such as loading models, running face recognition and face detection, and applying blurring.

typescript

import * as faceapi from "face-api.js";

import * as Comlink from "comlink";

import type {

DataTransfer,

FaceDetectionWorker,

ImageWithDescriptors,

} from "./types";

import { serializeFaceApiResult } from "./worker/serializers";

const MODEL_PATH = `/models`;

faceapi.env.setEnv(faceapi.env.createNodejsEnv());

faceapi.env.monkeyPatch({

//@ts-ignore

Canvas: OffscreenCanvas,

//@ts-ignore

createCanvasElement: () => {

return new OffscreenCanvas(480, 270);

},

});

const createCanvas = async (transferObj: DataTransfer) => {

try {

const buf = transferObj as ArrayBuffer | undefined;

if (!buf) {

return new OffscreenCanvas(20, 20);

}

const blob = new Blob([buf]);

const bitmap = await createImageBitmap(blob);

const canvas = new OffscreenCanvas(bitmap.width, bitmap.height);

const ctx = canvas.getContext("2d")!;

ctx.drawImage(bitmap, 0, 0, bitmap.width, bitmap.height);

return canvas;

} catch (error) {

console.error(

"Error creating image from buffer, using empty canvas instead",

error,

);

return new OffscreenCanvas(20, 20);

}

};

class WorkerClass implements FaceDetectionWorker {

async extractAllFaces(transferObj: DataTransfer) {

const canvas = await createCanvas(transferObj);

const detections = await faceapi

.detectAllFaces(canvas as unknown as faceapi.TNetInput)

.withFaceLandmarks()

.withFaceDescriptors();

return detections.map(serializeFaceApiResult);

}

async detectMatchingFaces(transferObj: {

allExampleFaces: Float32Array[];

allTargetImages: ArrayBuffer;

}) {

const allExampleFaces = transferObj.allExampleFaces;

const detections = await this.extractAllFaces(transferObj.allTargetImages);

const threshold = 0.5;

const matchedDescriptors = detections.filter(({ descriptor }) => {

return allExampleFaces.some((exampleDescriptor) => {

const distance = faceapi.euclideanDistance(

exampleDescriptor,

descriptor,

);

return distance < threshold;

});

});

return matchedDescriptors.map(serializeFaceApiResult);

}

async drawOutputImage(imageWithDescriptors: ImageWithDescriptors) {

const decodedCanvas = await createCanvas(imageWithDescriptors.imgElement);

const canvas = new OffscreenCanvas(

decodedCanvas.width,

decodedCanvas.height,

);

const ctxRes = canvas.getContext("2d", { willReadFrequently: true })!;

const detections = imageWithDescriptors.detections;

ctxRes.drawImage(

decodedCanvas as unknown as CanvasImageSource,

0,

0,

canvas.width,

canvas.height,

);

for (const detection of detections) {

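const box = detection?.detection?.box;

// Skip entries without a bounding box instead of destructuring undefined.

if (!box) continue;

const { x, y, width, height } = box;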

const padding = 0.2;

const expandedX = Math.max(0, x - width * padding);

const expandedY = Math.max(0, y - height * padding);

const expandedWidth = Math.min(

canvas.width - expandedX,

width * (1 + 2 * padding),

);

const expandedHeight = Math.min(

canvas.height - expandedY,

height * (1 + 2 * padding),

);

for (let i = 0; i < 3; i++) {

ctxRes.filter = "blur(50px)";

ctxRes.drawImage(

decodedCanvas as unknown as CanvasImageSource,

expandedX,

expandedY,

expandedWidth,

expandedHeight,

expandedX,

expandedY,

expandedWidth,

expandedHeight,

);

}

ctxRes.filter = "none";

}

return canvas.convertToBlob();

}

}

async function loadModels() {

console.log("WorkerClass init...");

await Promise.all([

faceapi.nets.ssdMobilenetv1.loadFromUri(MODEL_PATH),

faceapi.nets.faceLandmark68Net.loadFromUri(MODEL_PATH),

faceapi.nets.faceRecognitionNet.loadFromUri(MODEL_PATH),

]);

console.log("worker initialized and models loaded");

}

(async () => {

await loadModels();

const worker = new WorkerClass();

Comlink.expose(worker);

})();

The communication between the main thread and the worker is handled using the Comlink library, which provides a simple abstraction over Web Workers.

Optimizing the App With the Thread Pool Pattern

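The current implementation solves the problem of UI freezing by offloading the AI inference. However, the target images are still processed one at a time in a single worker, so the remaining CPU threads sit idle. Spreading the inference across them should shorten the total processing time.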

WorkerPool Class

First and foremost, we need to implement a class that will be responsible for maintaining a pool of workers, assigning tasks, and managing the queue.

At the start, our class needs to retrieve information from navigator.hardwareConcurrency to determine how many threads are available.

typescript

import * as Comlink from "comlink";

import type { FaceDetectionWorker } from "../types";

export class WorkerPool {

private workers: Comlink.Remote<FaceDetectionWorker>[] = [];

private availableWorkers: Set<number> = new Set();

private taskQueue: Task<any>[] = [];

private maxWorkers: number;

private workerInstances: Worker[] = [];

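constructor(maxWorkers: number = navigator.hardwareConcurrency || 4) {

const available = navigator.hardwareConcurrency || 4;

// Reserve one logical core for the main thread, but keep at least one worker.

this.maxWorkers = Math.max(1, Math.min(maxWorkers, available) - 1);

}

}

Note that it’s best to create one fewer worker than the number of available threads, since one of those threads will always be used by the main thread. The subtraction happens inside Math.max so that a single-core device still ends up with one worker rather than zero.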
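The sizing rule can also be pulled out into a small pure function, which makes it easy to check in isolation. The sketch below assumes the same fallback of 4 used in the constructor; resolvePoolSize is a hypothetical helper name, not part of the original class.

```typescript
// Hypothetical helper: computes how many workers the pool should spawn.
// `requested` is the caller's wish, `hardwareConcurrency` is the value
// reported by the browser (it may be undefined in some environments).
function resolvePoolSize(
  requested: number | undefined,
  hardwareConcurrency: number | undefined,
): number {
  // Same fallback as in the constructor when the browser reports nothing.
  const cores = hardwareConcurrency && hardwareConcurrency > 0 ? hardwareConcurrency : 4;
  const wanted = requested && requested > 0 ? Math.floor(requested) : cores;
  // Leave one logical core for the main thread, but never drop below one worker.
  return Math.max(1, Math.min(wanted, cores) - 1);
}

console.log(resolvePoolSize(undefined, 8)); // 7
console.log(resolvePoolSize(undefined, 1)); // 1
console.log(resolvePoolSize(16, 8)); // 7
console.log(resolvePoolSize(undefined, undefined)); // 3
```

In the browser, the second argument would be navigator.hardwareConcurrency.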

Then, in the init method, we need to spawn as many workers as the adjusted thread count allows.

typescript

export class WorkerPool {

// REST OF THE CODE

async init(): Promise<void> {

for (let i = 0; i < this.maxWorkers; i++) {

const worker = new Worker(

new URL("../face-detection.worker", import.meta.url),

{ type: "module" },

);

this.workerInstances.push(worker);

const wrappedWorker = Comlink.wrap<Comlink.Remote<FaceDetectionWorker>>(

worker as any,

);

this.workers.push(wrappedWorker);

this.availableWorkers.add(i);

}

}

// REST OF THE CODE

}

Next, we need a method responsible for executing a single task.

typescript

interface Task<T> {

fn: (worker: Comlink.Remote<FaceDetectionWorker>) => Promise<T>;

resolve: (value: T) => void;

reject: (error: Error) => void;

}

export class WorkerPool {

private workers: Comlink.Remote<FaceDetectionWorker>[] = [];

private availableWorkers: Set<number> = new Set();

private taskQueue: Task<any>[] = [];

// REST OF THE CODE

private async executeTask<T>(

workerIndex: number,

task: Task<T>,

): Promise<void> {

try {

const result = await task.fn(this.workers[workerIndex]);

task.resolve(result);

} catch (error) {

task.reject(error instanceof Error ? error : new Error(String(error)));

} finally {

this.availableWorkers.add(workerIndex);

if (this.taskQueue.length <= 0) {

return;

}

const nextTask = this.taskQueue.shift();

if (nextTask) {

this.availableWorkers.delete(workerIndex);

this.executeTask(workerIndex, nextTask);

}

}

}

// REST OF THE CODE

}

The executeTask method is private and takes two parameters. The first, workerIndex, is the index of the worker that will run the task. The second, task, is an object with three properties: fn, which receives the worker and returns a promise of a result described by a generic type, and resolve and reject, which handle the success and failure scenarios. These two come from the Promise created in execute, as the task is wrapped in a promise. Once the task settles, the worker is marked as available again. If there are any tasks in the queue, the oldest one is pulled and handed to the same worker through a recursive call to executeTask.

Now it’s time for the execute method, which is used to trigger the entire process of managing and executing tasks in the worker pool.

typescript

export class WorkerPool {

private workers: Comlink.Remote<FaceDetectionWorker>[] = [];

private availableWorkers: Set<number> = new Set();

private taskQueue: Task<any>[] = [];

// REST OF THE CODE

private async executeTask<T>(

workerIndex: number,

task: Task<T>,

): Promise<void> {

try {

const result = await task.fn(this.workers[workerIndex]);

task.resolve(result);

} catch (error) {

task.reject(error instanceof Error ? error : new Error(String(error)));

} finally {

this.availableWorkers.add(workerIndex);

if (this.taskQueue.length <= 0) {

return;

}

const nextTask = this.taskQueue.shift();

if (nextTask) {

this.availableWorkers.delete(workerIndex);

this.executeTask(workerIndex, nextTask);

}

}

}

async execute<T>(

fn: (worker: Comlink.Remote<FaceDetectionWorker>) => Promise<T>,

): Promise<T> {

return new Promise((resolve, reject) => {

if (this.availableWorkers.size > 0) {

const workerIndex = Array.from(this.availableWorkers)[0];

this.availableWorkers.delete(workerIndex);

this.executeTask(workerIndex, { fn, resolve, reject });

} else {

this.taskQueue.push({ fn, resolve, reject });

}

});

}

// REST OF THE CODE

}

This method essentially works as a higher-order function: it accepts the fn callback and exposes the selected worker instance by passing it to that callback. If a worker is ready to process the task, execution happens immediately; if not, the task is pushed to the queue.
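The dispatch and queueing logic does not depend on Web Workers at all, so it can be exercised with plain objects standing in for workers. The following sketch is a generic, simplified variant of the same idea, not the WorkerPool class itself; MiniPool, QueuedTask, and demoMiniPool are hypothetical names. It shows two things: a third task waits while two workers are busy, and a failing task rejects only its own promise while its worker goes on to serve the queue.

```typescript
interface QueuedTask<W, T> {
  fn: (worker: W) => Promise<T>;
  resolve: (value: T) => void;
  reject: (error: Error) => void;
}

class MiniPool<W> {
  private workers: W[];
  private idle: number[];
  private queue: QueuedTask<W, any>[] = [];

  constructor(workers: W[]) {
    this.workers = workers;
    this.idle = workers.map((_, index) => index);
  }

  execute<T>(fn: (worker: W) => Promise<T>): Promise<T> {
    return new Promise<T>((resolve, reject) => {
      const task: QueuedTask<W, T> = { fn, resolve, reject };
      const index = this.idle.shift();
      if (index === undefined) {
        this.queue.push(task); // all workers busy: wait in FIFO order
      } else {
        void this.run(index, task);
      }
    });
  }

  private async run<T>(index: number, task: QueuedTask<W, T>): Promise<void> {
    try {
      task.resolve(await task.fn(this.workers[index]));
    } catch (error) {
      task.reject(error instanceof Error ? error : new Error(String(error)));
    }
    const next = this.queue.shift();
    if (next) {
      void this.run(index, next); // hand the freed worker to the oldest queued task
    } else {
      this.idle.push(index);
    }
  }
}

// Demo with two stub "workers" that are just labels.
async function demoMiniPool(): Promise<string[]> {
  const log: string[] = [];
  const pool = new MiniPool<string>(["A", "B"]);
  const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
  const job = (label: string, ms: number, fail = false) =>
    pool.execute(async (worker) => {
      log.push(`${label} started on ${worker}`);
      await sleep(ms);
      if (fail) throw new Error(`${label} failed`);
      return label;
    });
  const status = (p: Promise<unknown>) => p.then(() => "fulfilled", () => "rejected");
  const statuses = await Promise.all([
    status(job("t1", 30)),
    status(job("t2", 10, true)), // fails, but must not block t3
    status(job("t3", 10)),
  ]);
  log.push(statuses.join(","));
  return log;
}

demoMiniPool().then((log) => console.log(log));
// [ 't1 started on A', 't2 started on B', 't3 started on B', 'fulfilled,rejected,fulfilled' ]
```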

The last useful method to include is the terminate method.

typescript

export class WorkerPool {

private workers: Comlink.Remote<FaceDetectionWorker>[] = [];

private availableWorkers: Set<number> = new Set();

private taskQueue: Task<any>[] = [];

// REST OF THE CODE

async terminate(): Promise<void> {

while (

this.taskQueue.length > 0 ||

this.availableWorkers.size < this.maxWorkers

) {

await new Promise((resolve) => setTimeout(resolve, 100));

}

this.workerInstances.forEach((worker) => worker.terminate());

this.workers = [];

this.availableWorkers.clear();

this.taskQueue = [];

}

// REST OF THE CODE

}

The terminate method gracefully shuts down all the workers: it polls every 100 ms until the queue is empty and every worker is idle, and only then terminates them.
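Polling with a timer is simple and works well here. For comparison, one possible alternative is to keep track of the promises that are still in flight and await them directly, so shutdown continues as soon as the last task settles. The sketch below shows only that bookkeeping; InFlightTracker and demoDrain are hypothetical names, and wiring it into the pool (calling track from execute and drain from terminate) is left as an assumption.

```typescript
class InFlightTracker {
  private pending = new Set<Promise<void>>();

  // Registers a task promise and forgets it once it settles,
  // whether it fulfilled or rejected. Returns the original promise.
  track<T>(task: Promise<T>): Promise<T> {
    const settled: Promise<void> = task
      .then(() => undefined, () => undefined)
      .then(() => {
        this.pending.delete(settled);
      });
    this.pending.add(settled);
    return task;
  }

  get size(): number {
    return this.pending.size;
  }

  // Resolves once every tracked promise has settled, including
  // promises that were registered while we were waiting.
  async drain(): Promise<void> {
    while (this.pending.size > 0) {
      await Promise.all(Array.from(this.pending));
    }
  }
}

async function demoDrain(): Promise<{ finished: number; remaining: number }> {
  const tracker = new InFlightTracker();
  const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
  let finished = 0;

  for (const ms of [5, 15, 10]) {
    tracker.track(sleep(ms).then(() => { finished++; }));
  }
  // A failing task must not prevent drain() from resolving.
  tracker
    .track(sleep(5).then(() => { throw new Error("boom"); }))
    .catch(() => { finished++; });

  await tracker.drain();
  return { finished, remaining: tracker.size };
}

demoDrain().then((summary) => console.log(summary)); // { finished: 4, remaining: 0 }
```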

The complete module looks like this:

typescript

import * as Comlink from "comlink";
import type { FaceDetectionWorker } from "../types";

interface Task<T> {
  fn: (worker: Comlink.Remote<FaceDetectionWorker>) => Promise<T>;
  resolve: (value: T) => void;
  reject: (error: Error) => void;
}

export class WorkerPool {
  private workers: Comlink.Remote<FaceDetectionWorker>[] = [];
  private availableWorkers: Set<number> = new Set();
  private taskQueue: Task<any>[] = [];
  private maxWorkers: number;
  private workerInstances: Worker[] = [];

  constructor(maxWorkers: number = navigator.hardwareConcurrency || 4) {
    const available = navigator.hardwareConcurrency || 4;
    // Reserve one logical core for the main thread, but keep at least one worker.
    this.maxWorkers = Math.max(1, Math.min(maxWorkers, available) - 1);
  }

  // Spawns the workers and marks all of them as available.
  async init(): Promise<void> {
    for (let i = 0; i < this.maxWorkers; i++) {
      const worker = new Worker(
        new URL("../face-detection.worker", import.meta.url),
        { type: "module" },
      );
      this.workerInstances.push(worker);
      const wrappedWorker = Comlink.wrap<Comlink.Remote<FaceDetectionWorker>>(
        worker as any,
      );
      this.workers.push(wrappedWorker);
      this.availableWorkers.add(i);
    }
  }

  // Runs fn on a free worker, or queues it when all workers are busy.
  async execute<T>(
    fn: (worker: Comlink.Remote<FaceDetectionWorker>) => Promise<T>,
  ): Promise<T> {
    return new Promise((resolve, reject) => {
      if (this.availableWorkers.size > 0) {
        const workerIndex = Array.from(this.availableWorkers)[0];
        this.availableWorkers.delete(workerIndex);
        this.executeTask(workerIndex, { fn, resolve, reject });
      } else {
        this.taskQueue.push({ fn, resolve, reject });
      }
    });
  }

  private async executeTask<T>(
    workerIndex: number,
    task: Task<T>,
  ): Promise<void> {
    try {
      const result = await task.fn(this.workers[workerIndex]);
      task.resolve(result);
    } catch (error) {
      task.reject(error instanceof Error ? error : new Error(String(error)));
    } finally {
      this.availableWorkers.add(workerIndex);
      if (this.taskQueue.length <= 0) {
        return;
      }
      // Hand the freed worker to the oldest queued task (FIFO).
      const nextTask = this.taskQueue.shift();
      if (nextTask) {
        this.availableWorkers.delete(workerIndex);
        this.executeTask(workerIndex, nextTask);
      }
    }
  }

  // Waits until the queue is empty and all workers are idle, then shuts down.
  async terminate(): Promise<void> {
    while (
      this.taskQueue.length > 0 ||
      this.availableWorkers.size < this.maxWorkers
    ) {
      await new Promise((resolve) => setTimeout(resolve, 100));
    }
    this.workerInstances.forEach((worker) => worker.terminate());
    this.workers = [];
    this.availableWorkers.clear();
    this.taskQueue = [];
  }
}

useFace Hook

Now we need to adjust the useFace hook to call inference methods through the worker pool we created.

The first step is to replace workerRef, which held a single concrete worker, with workerPoolRef, which holds an instance of the WorkerPool class.

typescript

import { useEffect, useRef, useState } from "react";

import { WorkerPool } from "./worker-pool";

function useFace() {

const workerPoolRef = useRef<WorkerPool | null>(null);

useEffect(() => {

const initializeWorkerPool = async () => {

const pool = new WorkerPool();

await pool.init();

workerPoolRef.current = pool;

};

initializeWorkerPool().catch((error) => {

console.error("Error initializing worker pool:", error);

});

return () => {

if (workerPoolRef.current) {

workerPoolRef.current.terminate();

}

};

}, []);

}

We need to instantiate the WorkerPool, initialize it, and assign it to workerPoolRef. It’s also important to remember to terminate the worker pool in the cleanup function.
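One detail worth considering: the ref is assigned only after init resolves, so if the cleanup function runs before that point (for example, when React StrictMode re-runs effects in development), the ref is still empty and that pool is never terminated. The framework-free sketch below shows one way to guard against this. It is shaped like a useEffect body, so it returns a cleanup function; startPool and PoolLike are hypothetical names, and the approach is an assumption rather than part of the original hook.

```typescript
// Minimal shape of the pool that this sketch relies on.
interface PoolLike {
  init(): Promise<void>;
  terminate(): Promise<void>;
}

// Starts a pool and returns a cleanup function.
// `onReady` is called only if cleanup has not run yet.
function startPool<P extends PoolLike>(
  createPool: () => P,
  onReady: (pool: P) => void,
): () => void {
  let disposed = false;
  const pool = createPool();
  const ready = pool.init();

  ready.then(
    () => {
      if (!disposed) onReady(pool); // do not publish a pool that was already cleaned up
    },
    (error) => console.error("Error initializing worker pool:", error),
  );

  return () => {
    disposed = true;
    // Wait for init to settle so terminate() never races a half-built pool.
    const shutDown = () => pool.terminate();
    ready
      .then(shutDown, shutDown)
      .catch((error) => console.error("Error terminating worker pool:", error));
  };
}

// Possible usage inside the hook (shown as a comment because it needs React):
// useEffect(
//   () => startPool(() => new WorkerPool(), (pool) => { workerPoolRef.current = pool; }),
//   [],
// );
```

Because the cleanup function closes over the pool it created, every pool gets terminated even when the ref was never assigned.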

Now, in the handleFace function, every task should be executed within the callback passed to the pool’s execute method.

typescript

async function getImageWithDetections(

allExampleFaces: Float32Array[],

targetImageData: { id: number; src: string },

workerPool: WorkerPool,

) {

// REST OF THE CODE

const matchedDescriptors = await workerPool.execute((worker) =>

worker.detectMatchingFaces(payload),

);

// REST OF THE CODE

}

function useFace() {

const [exampleImage, setExampleImage] = useState<string | null>(null);

const [targetImages, setTargetImages] = useState<string[]>([]);

const [isLoading, setIsLoading] = useState(false);

const [error, setError] = useState<string | null>(null);

const [outputImages, setOutputImages] = useState<string[]>([]);

const workerPoolRef = useRef<WorkerPool | null>(null);

// REST OF THE CODE

const handleFace = async () => {

// REST OF THE CODE

const allExampleFaces = await workerPool.execute((worker) =>

worker.extractAllFaces(exampleArrayBuffer),

);

const processedImages = await Promise.all(

targetImagesWithId.map((targetImage) =>

getImageWithDetections(

allExampleFaces.map((face) => face.descriptor),

targetImage,

workerPool,

),

),

);

const outputPromises = processedImages.map((imageWithDescriptors) =>

workerPool.execute((worker) =>

worker.drawOutputImage(imageWithDescriptors),

),

);

// REST OF THE CODE

}

Performance Comparison

Let’s run some tests to observe the difference between the optimized version and the original one. The tests will be conducted on a computer with a processor that has 8 threads, and inference will be performed on 20 images.

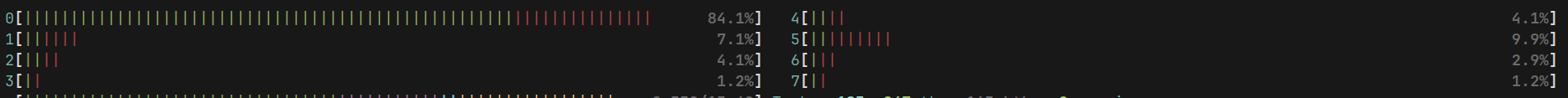

Original Version

Only one thread is used for inference. The UI is not blocked, and the app runs smoothly, but the entire process takes 71 seconds.

The CPU utilization is shown in the diagram from htop:

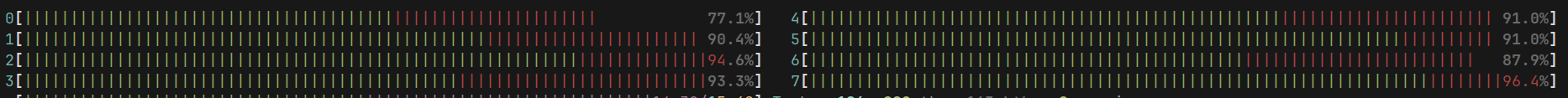

Optimized Version

The optimized version uses all available CPU threads. The improvement is significant: the entire process now takes 36 seconds instead of 71, which is roughly a 2x speedup.
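To put the two timings in perspective, the speedup and the parallel efficiency can be computed directly. The snippet below uses the measured 71 and 36 seconds; the divisor of 8 is an assumption based on the 8 hardware threads of the test machine, since the number of workers actually spawned depends on how the pool is configured.

```typescript
// Speedup: how many times faster the optimized run is.
function speedup(baselineSeconds: number, optimizedSeconds: number): number {
  return baselineSeconds / optimizedSeconds;
}

// Parallel efficiency: the share of ideal linear scaling that was achieved.
function parallelEfficiency(speedupFactor: number, threadCount: number): number {
  return speedupFactor / threadCount;
}

const measuredSpeedup = speedup(71, 36);
console.log(measuredSpeedup.toFixed(2)); // "1.97"
console.log(parallelEfficiency(measuredSpeedup, 8).toFixed(2)); // "0.25"
```

So the run is about twice as fast, which is well short of linear scaling across 8 threads. That is not unusual for workloads like this, where not every part of the pipeline parallelizes equally well.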

We can also see the difference in the CPU usage diagram below:

Wrapping Up

Recently, many front-end developers using React have been talking about meta-frameworks, server components, and moving rendering from the client to the server. In real-world scenarios, however, heavy-lifting tasks often still need to be performed on the client. This is where it pays off to take advantage of the features provided by the browser and the language itself. It’s always useful to be aware of the capabilities of the environment your language runs in.

I also believe we should look beyond our own field of programming. If you are dedicated to front-end development, explore backend concepts, and vice versa. Most patterns are not exclusive to one side; some are simply more commonly used in certain contexts.

I hope you enjoyed the post and learned something valuable from it. 🫡